LLaMAFactory Megatron的加速(2)速度测试

目录

单机4卡训练 LLaMAFactory Megatron 速度如何?这篇做了测试。

MCA 训练

镜像kevinchina/deeplearning:llamafactory0-9-4-base-1-megatron-1-ok

单机4卡训练。

脚本:

bash展开代码model_name_or_path: /mnt/jfs6/model/Qwen3-VL-8B-Instruct/

image_max_pixels: 451584

video_max_pixels: 16384

do_train: true

stage: sft

finetuning_type: full

dataset: llava_1k_en # 或使用 mllm_demo

template: qwen3_vl_nothink

cutoff_len: 2048

trust_remote_code: true

output_dir: saves/mca/qwen3_vl_test2

per_device_train_batch_size: 1

gradient_accumulation_steps: 8

num_train_epochs: 1.0

learning_rate: 1.0e-6

lr_scheduler_type: cosine

bf16: true

flash_attn: auto

# 冻结参数配置

freeze_vision_tower: false

freeze_multi_modal_projector: false

freeze_language_model: false

# 数据加载配置

preprocessing_num_workers: 32

preprocessing_batch_size: 32

dataloader_num_workers: 32

data_shared_file_system: true

# 日志和保存配置

logging_steps: 10

plot_loss: true

save_steps: 500

save_strategy: steps

overwrite_output_dir: false

save_only_model: false

report_to: none

# SwanLab 配置

use_swanlab: true

swanlab_project: run-qwen3vl8b-1030 # 可根据需要修改

swanlab_mode: cloud

# swanlab_api_key: pM7Xvs5OS2EeXPO5gKXfJ # 建议通过环境变量设置,不要硬编码在配置文件中

# Megatron 并行配置

tensor_model_parallel_size: 1

pipeline_model_parallel_size: 4

sequence_parallel: false

bias_activation_fusion: true

apply_rope_fusion: true

use_distributed_optimizer: true

训练指令:

bash展开代码USE_MCA=1 llamafactory-cli train /mnt/s3fs/train-LlamaFactory/examples/megatron/qwen3_vl_2b_full.yaml

qwen3vl 8b MCA用时:3分钟37秒

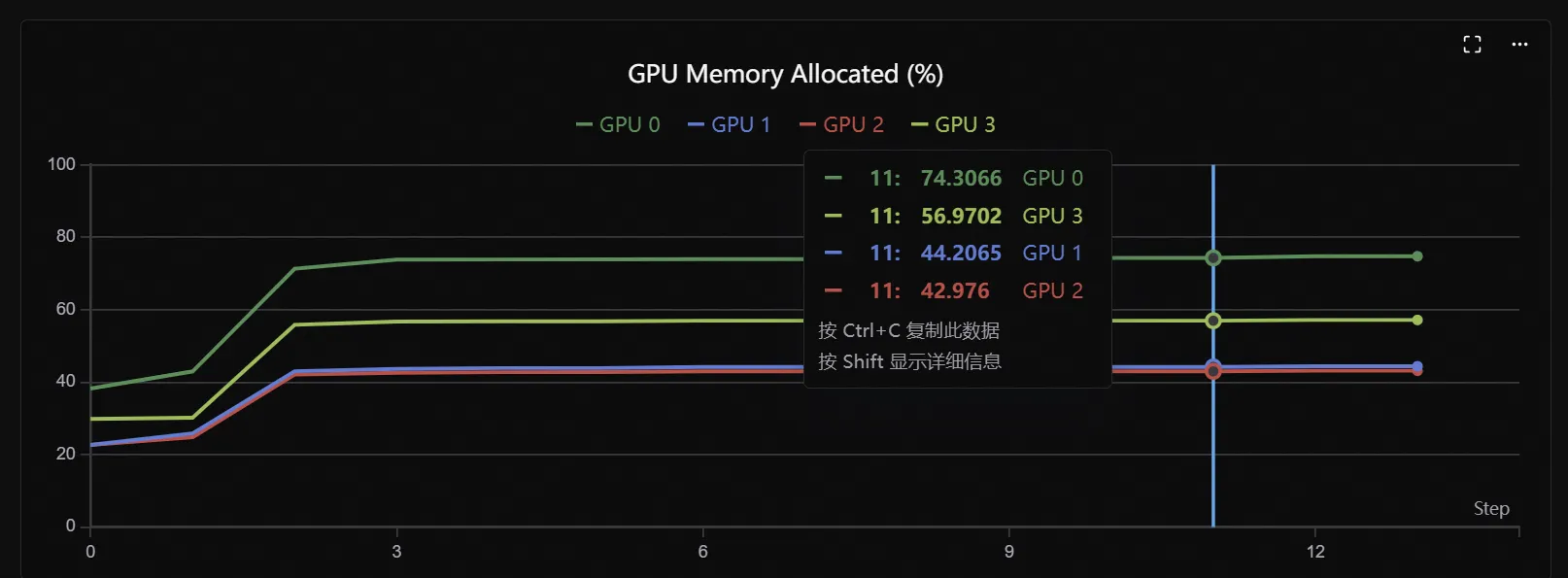

显存占用:

数据缓存:

bash展开代码# 或者清除所有缓存(如果上面不行)

rm -rf ~/.cache/huggingface/datasets/*

deepspeed

镜像:kevinchina/deeplearning

不行了,deepspeed版本不对。使用镜像kevinchina/deeplearning:llamafactory-qwen3vl-ok

bash展开代码model_name_or_path: /mnt/jfs6/model/Qwen3-VL-8B-Instruct/

image_max_pixels: 451584

video_max_pixels: 16384

do_train: true

stage: sft

finetuning_type: full

dataset: llava_1k_en # 或使用 mllm_demo

template: qwen3_vl_nothink

cutoff_len: 2048

trust_remote_code: true

output_dir: saves/mca/qwen3_vl_haha1

per_device_train_batch_size: 1

gradient_accumulation_steps: 8

num_train_epochs: 1.0

learning_rate: 1.0e-6

lr_scheduler_type: cosine

bf16: true

flash_attn: auto

# 冻结参数配置

freeze_vision_tower: false

freeze_multi_modal_projector: false

freeze_language_model: false

# 数据加载配置

preprocessing_num_workers: 32

preprocessing_batch_size: 32

dataloader_num_workers: 32

data_shared_file_system: true

# 日志和保存配置

logging_steps: 10

plot_loss: true

save_steps: 500

save_strategy: steps

overwrite_output_dir: false

save_only_model: false

report_to: none

# SwanLab 配置

use_swanlab: true

swanlab_project: run-qwen3vl8b-1030 # 可根据需要修改

swanlab_mode: cloud

# swanlab_api_key: pM7Xvs5OS2EeXPO5gKXfJ # 建议通过环境变量设置,不要硬编码在配置文件中

# --deepspeed /app/examples/deepspeed/ds_z2_config.json

deepspeed: /app/examples/deepspeed/ds_z2_config.json

bash展开代码llamafactory-cli train /mnt/s3fs/train-LlamaFactory/examples/train_full/qwen3_vl_8b_full.yaml

qwen3vl 8b deepspeed用时: 4分钟39秒

如果对你有用的话,可以打赏哦

打赏

本文作者:Dong

本文链接:

版权声明:本博客所有文章除特别声明外,均采用 CC BY-NC。本作品采用《知识共享署名-非商业性使用 4.0 国际许可协议》进行许可。您可以在非商业用途下自由转载和修改,但必须注明出处并提供原作者链接。 许可协议。转载请注明出处!

目录