[Python] PyTorch: Check Whether CUDA Is Available and How Much GPU Memory Is Left

```text
CUDA is available; 1 GPU device(s) found.
Current GPU device index: 0
Current GPU device name: NVIDIA T1000
Total GPU memory: 4.00 GB
GPU memory used: 0.00 GB
Remaining GPU memory: 4.00 GB
PyTorch version: 1.10.1+cu102
```

```python
import torch

# Check whether CUDA is available
cuda_available = torch.cuda.is_available()

if cuda_available:
    # Number of visible GPU devices
    num_gpu = torch.cuda.device_count()

    # Index of the GPU currently in use
    current_gpu_index = torch.cuda.current_device()

    # Name of the current GPU
    current_gpu_name = torch.cuda.get_device_name(current_gpu_index)

    # Total device memory, bytes -> "GB" (1024**3 bytes, strictly GiB)
    total_memory = torch.cuda.get_device_properties(current_gpu_index).total_memory / (1024 ** 3)

    # Memory held by tensors in *this* process only; it excludes PyTorch's
    # cache, the CUDA context, and any other process using the GPU
    used_memory = torch.cuda.memory_allocated(current_gpu_index) / (1024 ** 3)

    # Because of the above, this is an upper bound on the truly free memory
    free_memory = total_memory - used_memory

    print(f"CUDA is available; {num_gpu} GPU device(s) found.")
    print(f"Current GPU device index: {current_gpu_index}")
    print(f"Current GPU device name: {current_gpu_name}")
    print(f"Total GPU memory: {total_memory:.2f} GB")
    print(f"GPU memory used: {used_memory:.2f} GB")
    print(f"Remaining GPU memory: {free_memory:.2f} GB")
else:
    print("CUDA is not available.")

# PyTorch version, and the CUDA version this PyTorch build was compiled with
print(f"PyTorch version: {torch.__version__}")
print(f"CUDA version: {torch.version.cuda}")
```
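One caveat about the numbers above: `torch.cuda.memory_allocated` only counts memory held by tensors in the current Python process. It does not include PyTorch's caching allocator (`torch.cuda.memory_reserved`), the CUDA context, or other programs on the GPU, which is why a fresh process reports 0.00 GB used and "remaining" equals the total. Below is a minimal sketch of a more complete report. It assumes a PyTorch version that provides `torch.cuda.mem_get_info` (a device-wide free/total query from the CUDA driver); I am not certain 1.10.1 has it, so the code falls back to the older calculation. The helper names `bytes_to_gib` and `device_memory_report` are hypothetical.

```python
def bytes_to_gib(num_bytes: int) -> float:
    """Convert bytes to GiB (1024**3 bytes), the unit the script above labels "GB"."""
    return num_bytes / (1024 ** 3)


def device_memory_report(device_index: int = 0) -> dict:
    """Return allocated/reserved/free/total memory (GiB) for one CUDA device.

    torch is imported lazily so bytes_to_gib can be used without it.
    """
    import torch

    if not torch.cuda.is_available():
        raise RuntimeError("CUDA is not available")

    report = {
        # Memory held by live tensors in this process
        "allocated": bytes_to_gib(torch.cuda.memory_allocated(device_index)),
        # Memory held by PyTorch's caching allocator in this process (>= allocated)
        "reserved": bytes_to_gib(torch.cuda.memory_reserved(device_index)),
    }
    if hasattr(torch.cuda, "mem_get_info"):
        # Device-wide figures from the driver: other processes are included
        free_bytes, total_bytes = torch.cuda.mem_get_info(device_index)
        report["free"] = bytes_to_gib(free_bytes)
        report["total"] = bytes_to_gib(total_bytes)
    else:
        # Older PyTorch: total minus what this process reserved (an upper bound)
        total_bytes = torch.cuda.get_device_properties(device_index).total_memory
        report["total"] = bytes_to_gib(total_bytes)
        report["free"] = report["total"] - report["reserved"]
    return report
```

On a CUDA machine, `print(device_memory_report(0))` prints a dict with the four values in GiB.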

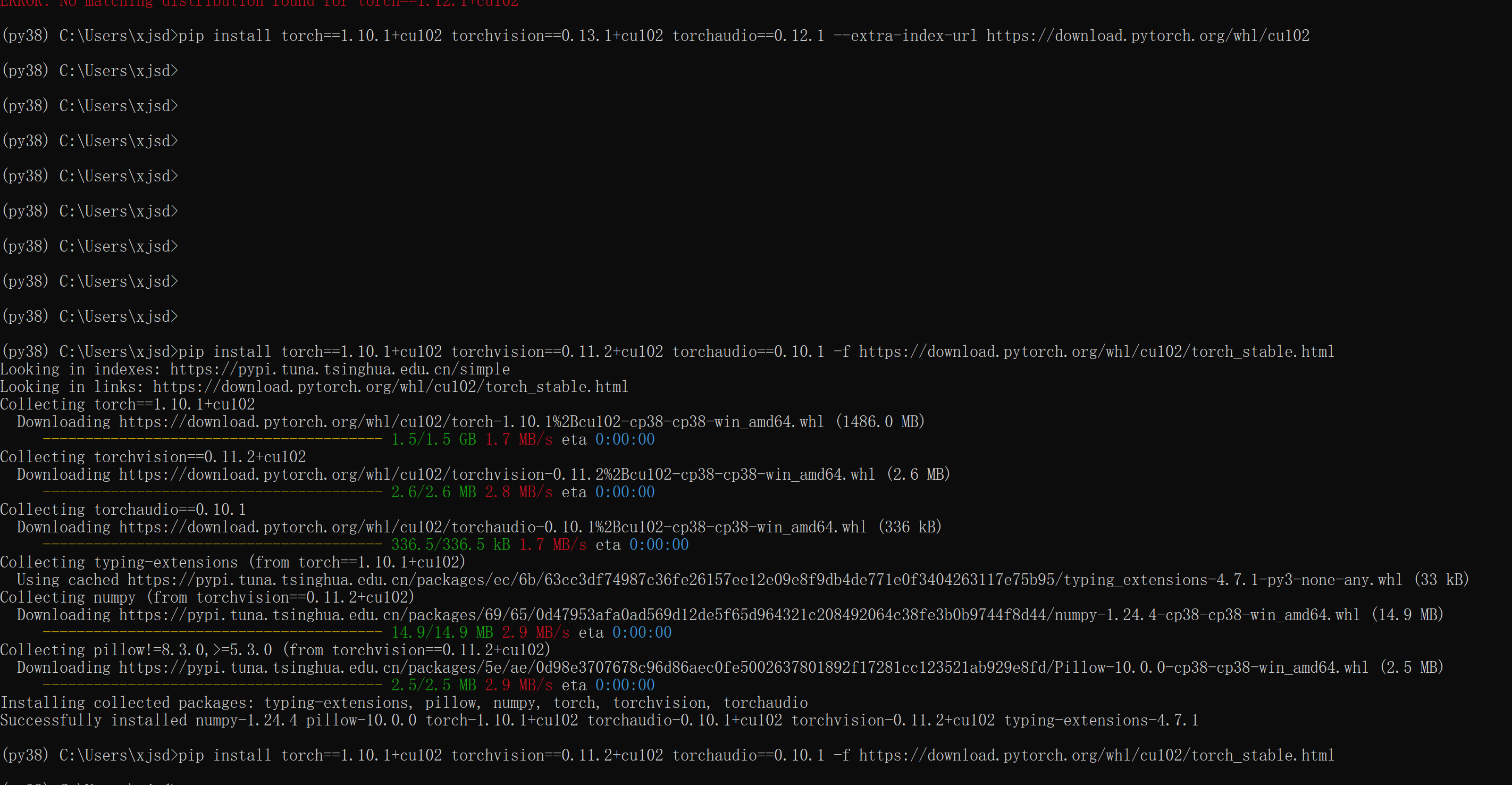

On Windows, I installed the graphics driver first, then CUDA 10.2, and finally PyTorch with the following command:

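```shell
pip install torch==1.10.1+cu102 torchvision==0.11.2+cu102 torchaudio==0.10.1 --extra-index-url https://download.pytorch.org/whl/cu102
```

The torchvision and torchaudio versions must match the torch release: for torch 1.10.1 these are torchvision 0.11.2 and torchaudio 0.10.1.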
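After installing, it is worth confirming that you actually got the CUDA build you asked for (a CPU-only build reports `torch.version.cuda` as `None`). A minimal sketch: read the local tag from `torch.__version__` (e.g. `cu102` in `1.10.1+cu102`) and compare it with `torch.version.cuda`. The function names are hypothetical, and the tag-to-version rule (last digit is the minor version) is an assumption that fits tags such as `cu102` and `cu113`.

```python
from typing import Optional


def local_build_tag(torch_version: str) -> Optional[str]:
    """Return the local version tag, e.g. "cu102" for "1.10.1+cu102", else None."""
    _, sep, tag = torch_version.partition("+")
    return tag if sep else None


def cuda_version_from_tag(tag: Optional[str]) -> Optional[str]:
    """Map "cu102" -> "10.2" (assumes the last digit is the minor version)."""
    if not tag or not tag.startswith("cu"):
        return None
    digits = tag[2:]
    if len(digits) < 2 or not digits.isdigit():
        return None
    return f"{digits[:-1]}.{digits[-1]}"


def check_installed_build(expected_tag: str = "cu102") -> bool:
    """True if the installed torch wheel carries expected_tag and matching CUDA."""
    import torch  # imported lazily so the parsing helpers work without torch

    tag = local_build_tag(torch.__version__)
    print(f"torch {torch.__version__}, built with CUDA {torch.version.cuda}")
    return tag == expected_tag and torch.version.cuda == cuda_version_from_tag(tag)
```

For the `1.10.1+cu102` wheel, `check_installed_build()` should return `True`, since that build reports CUDA `10.2`.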

```python
import subprocess

import torch


def check_gpu_availability():
    print("==== Checking GPU Availability ====")
    if torch.cuda.is_available():
        print(f"GPU is available. Number of GPUs: {torch.cuda.device_count()}")
        for i in range(torch.cuda.device_count()):
            print(f"GPU {i}: {torch.cuda.get_device_name(i)}")
    else:
        print("No GPU available. Please check your hardware or drivers.")
        return False
    return True


def check_cuda_and_cudnn():
    print("\n==== Checking CUDA and cuDNN Availability ====")
    if torch.cuda.is_available():
        # CUDA version PyTorch was built with (not necessarily the system toolkit)
        print(f"CUDA version: {torch.version.cuda}")
        print(f"cuDNN version: {torch.backends.cudnn.version()}")
        print(f"cuDNN enabled: {torch.backends.cudnn.enabled}")
    else:
        print("CUDA or cuDNN not available. Please check the installation.")


def test_gpu_computation():
    print("\n==== Testing Simple Computation on GPU ====")
    try:
        # Use the current default GPU; a hard-coded "cuda:2" fails on any
        # machine with fewer than three GPUs (e.g. the single T1000 above)
        device = torch.device("cuda")
        a = torch.randn(50, 50, device=device)
        b = torch.randn(50, 50, device=device)
        c = torch.matmul(a, b)
        # CUDA kernels run asynchronously; wait so any error surfaces here
        torch.cuda.synchronize()
        print("Matrix multiplication on GPU successful.")
    except Exception as e:
        print(f"Error during computation on GPU: {e}")


def check_gpu_memory():
    print("\n==== Checking GPU Memory Usage ====")
    try:
        # Caching-allocator statistics for the current device, as a table
        print(torch.cuda.memory_summary())
    except Exception as e:
        print(f"Error retrieving GPU memory info: {e}")


def run_nvidia_smi():
    print("\n==== Running nvidia-smi Command ====")
    try:
        # Run the nvidia-smi command and capture its output
        result = subprocess.run(
            ["nvidia-smi"], stdout=subprocess.PIPE, stderr=subprocess.PIPE, text=True
        )
        if result.returncode == 0:
            print(result.stdout)
        else:
            print(f"Error running nvidia-smi: {result.stderr}")
    except Exception as e:
        # e.g. FileNotFoundError when nvidia-smi is not on PATH
        print(f"Error running nvidia-smi command: {e}")


def main():
    print("==== Starting GPU Health Check ====")
    # Step 1: Check GPU availability
    if not check_gpu_availability():
        return
    # Step 2: Check CUDA and cuDNN versions
    check_cuda_and_cudnn()
    # Step 3: Test GPU computation
    test_gpu_computation()
    # Step 4: Check GPU memory usage
    check_gpu_memory()
    # Step 5: Run nvidia-smi to check GPU status
    run_nvidia_smi()
    print("\n==== GPU Health Check Completed ====")


if __name__ == "__main__":
    main()
```
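`run_nvidia_smi` prints the full human-readable table. If you want device-wide memory figures (which, unlike `torch.cuda.memory_allocated`, cover every process on the GPU) as numbers you can compute with, `nvidia-smi` also has a query mode: `--query-gpu=index,name,memory.total,memory.used,memory.free --format=csv,noheader,nounits` prints one comma-separated line per GPU, with memory in MiB. A minimal sketch of parsing it; `parse_gpu_query` and `query_gpus` are hypothetical helper names, and fields reported as `[N/A]` are not handled.

```python
import subprocess
from typing import Dict, List, Union

QUERY_FIELDS = ["index", "name", "memory.total", "memory.used", "memory.free"]


def parse_gpu_query(text: str) -> List[Dict[str, Union[int, str]]]:
    """Parse `nvidia-smi --query-gpu=... --format=csv,noheader,nounits` output.

    One GPU per non-empty line; memory values are integers in MiB.
    """
    gpus = []
    for line in text.strip().splitlines():
        if not line.strip():
            continue
        values = [v.strip() for v in line.split(",")]
        row = dict(zip(QUERY_FIELDS, values))
        gpus.append({
            "index": int(row["index"]),
            "name": row["name"],
            "total_mib": int(row["memory.total"]),
            "used_mib": int(row["memory.used"]),
            "free_mib": int(row["memory.free"]),
        })
    return gpus


def query_gpus() -> List[Dict[str, Union[int, str]]]:
    """Run nvidia-smi in query mode; raises if it is missing or exits non-zero."""
    result = subprocess.run(
        [
            "nvidia-smi",
            "--query-gpu=" + ",".join(QUERY_FIELDS),
            "--format=csv,noheader,nounits",
        ],
        stdout=subprocess.PIPE,
        stderr=subprocess.PIPE,
        text=True,
        check=True,
    )
    return parse_gpu_query(result.stdout)
```

For example, `for gpu in query_gpus(): print(gpu["index"], gpu["name"], gpu["free_mib"], "MiB free")`.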


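Author: Dong

Article link:

Copyright notice: Unless otherwise stated, all articles on this blog are licensed under CC BY-NC. This work is licensed under the Creative Commons Attribution-NonCommercial 4.0 International License. You may freely share and adapt it for non-commercial purposes, but you must credit the source and provide a link to the original author. Please credit the source when reposting!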